Kolmogorov-Arnold Networks

2024.05.13

- Date: 2024-05-17 (16:00 ~ )
- Location: UNIST Building 108, Room 320

***

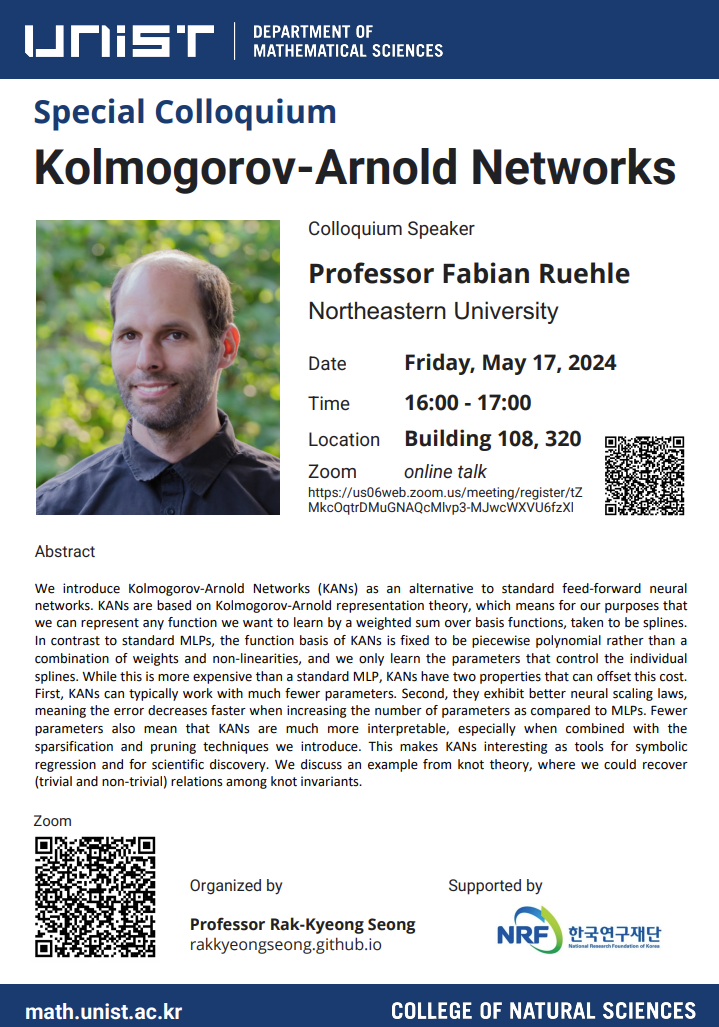

Date: May 17, 2024

Time: 16:00 – 17:00 (Korea Standard Time)

Location: UNIST Building 108, Room 320, and online via Zoom (online talk)

Talk Title: Kolmogorov-Arnold Networks

Speaker: Prof. Fabian Ruehle (Northeastern University)

Talk Abstract:

We introduce Kolmogorov-Arnold Networks (KANs) as an alternative to standard feed-forward neural networks. KANs are based on Kolmogorov-Arnold representation theory, which means for our purposes that we can represent any function we want to learn by a weighted sum over basis functions, taken to be splines. In contrast to standard MLPs, the function basis of KANs is fixed to be piecewise polynomial rather than a combination of weights and non-linearities, and we only learn the parameters that control the individual splines. While this is more expensive than a standard MLP, KANs have two properties that can offset this cost. First, KANs can typically work with far fewer parameters. Second, they exhibit better neural scaling laws, meaning the error decreases faster with increasing parameter count than it does for MLPs. Fewer parameters also mean that KANs are much more interpretable, especially when combined with the sparsification and pruning techniques we introduce. This makes KANs interesting as tools for symbolic regression and for scientific discovery. We discuss an example from knot theory, where we could recover (trivial and non-trivial) relations among knot invariants.
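To make the phrase "a weighted sum over basis functions, taken to be splines" concrete, here is a minimal sketch of a single learnable one-dimensional function with a fixed piecewise-polynomial basis. It is an illustration only and not the speaker's implementation. Every name in it (`hat_basis`, `fit_edge_function`, `eval_edge_function`) is hypothetical. It assumes degree-1 (piecewise-linear) B-splines on a uniform grid, and it fits the coefficients by least squares rather than by the gradient-based training a full network would use.

```python
import numpy as np

def hat_basis(x, knots):
    """Evaluate degree-1 B-spline ("hat") basis functions on a uniform grid.

    Returns an array of shape (len(x), len(knots)). Each column is one basis
    function that equals 1 at its own knot and falls linearly to 0 at the
    neighbouring knots.
    """
    x = np.asarray(x, dtype=float)
    h = knots[1] - knots[0]  # uniform knot spacing (assumption of this sketch)
    return np.clip(1.0 - np.abs(x[:, None] - knots[None, :]) / h, 0.0, None)

def fit_edge_function(x, y, knots):
    """Fit the spline coefficients; the basis itself stays fixed."""
    B = hat_basis(x, knots)
    coeffs, *_ = np.linalg.lstsq(B, y, rcond=None)
    return coeffs

def eval_edge_function(x, coeffs, knots):
    """Evaluate the learned function as a weighted sum of basis functions."""
    return hat_basis(x, knots) @ coeffs

# Toy target: learn sin(pi * x) on [-1, 1] with 21 spline coefficients.
knots = np.linspace(-1.0, 1.0, 21)
x_train = np.linspace(-1.0, 1.0, 200)
y_train = np.sin(np.pi * x_train)
coeffs = fit_edge_function(x_train, y_train, knots)

x_test = np.linspace(-1.0, 1.0, 50)
y_pred = eval_edge_function(x_test, coeffs, knots)
max_err = np.max(np.abs(y_pred - np.sin(np.pi * x_test)))
print(f"number of learned parameters: {coeffs.size}, max abs error: {max_err:.4f}")
```

The only trainable quantities are the entries of `coeffs`, which mirrors the abstract's statement that only the parameters controlling the individual splines are learned. In a KAN, many such one-dimensional functions would be composed and summed across layers.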

***